AI: The Path of the Future or Industry Hype?

Artificial intelligence (AI) — the science of teaching a machine how to “think” — has its roots in the 1950s. But until recently, it was considered a niche that was reserved for academics and government-sponsored research programs. And even today, AI’s overarching vision — building a machine that can exhibit the general intelligence of a human being — remains aspirational.

Nonetheless, companies are now racing to integrate AI into everything from self-driving cars to medical diagnosis. What has driven AI back to the forefront is the emergence of sensors, big data, and computational horsepower. Together, these factors have fueled seismic advances in machine learning: teaching computers to identify patterns and make predictions by analyzing vast amounts of data rather than by following explicitly programmed instructions.

Technology Background

The most promising machine learning systems are powered by deep learning, a machine learning technique that uses big data to train computers to extract and evaluate complex hierarchical relationships from raw data (such as labeled images or speech) through progressive refinement across many sequential computational layers. These advanced systems are already all around us:

- Natural speech recognition & intelligent agents (e.g., Apple Siri, Microsoft Cortana, and Amazon Alexa)

- Photo recognition (e.g., Google Images and Apple iCloud)

- Advanced Driver Assistance Systems (ADAS) installed in the 2018 Audi A8 and Cadillac CT6, supporting limited self-driving with human backup (“Level 3” automation in the Audi and “Level 2” in the Cadillac)

- Biometric security systems (e.g., Apple Face ID)

- Medical imaging (e.g., Enlitic’s tumor detection, Arterys’s blood flow quantification and visualization, and early detection of diabetic retinopathy)

- Manufacturing and robotics

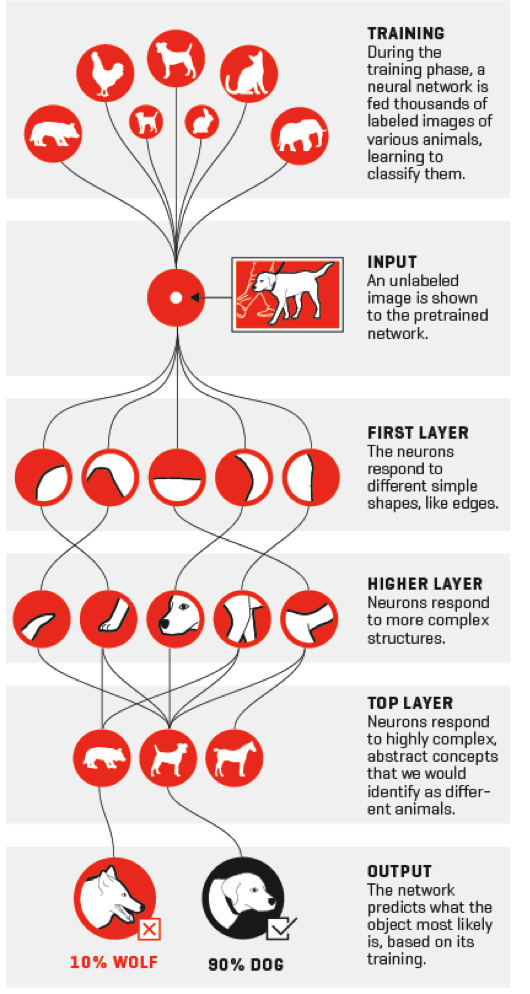

In contrast to more traditional techniques, which apply a predetermined set of rules to distinguish between objects (e.g., a fox and a dog) based on expected differences in their features (e.g., the shape of their ears or the bushiness of their coats), deep learning exploits big data to teach computers by example. The diagram above illustrates how this works at a high level. Training and classification take place in a “neural net,” a computational model composed of many simple computing nodes called “neurons” organized in successive stages called “layers.” Each neuron responds to different features and passes its result to neurons in the next layer, which process higher-order features, and so on. During the training phase, the neural net is fed many examples (in this case, labeled images of different types of animals) and learns to classify them: the mathematical response of each neuron to its inputs is iteratively adjusted until the error in predicting the labels of the examples has been minimized. After training, the neural net can classify unlabeled images. Mispredictions can be used to further refine the training; in other words, the system can keep learning.

(Source: http://fortune.com/ai-artificial-intelligence-deep-machine-learning/.)
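To make the training loop concrete, here is a minimal sketch in plain Python; it is an illustration, not the method behind any product mentioned here. It assumes a toy setup: instead of raw image pixels, each animal is described by two hypothetical numeric features (think ear pointiness and tail bushiness), and a tiny network with one hidden layer of three tanh neurons feeds a single sigmoid output neuron. Training uses ordinary backpropagation with stochastic gradient descent, one common way of “iteratively adjusting” neuron responses. The data, layer sizes, learning rate, and function names are all illustrative choices.

```python
import math
import random

# Hypothetical toy data: two numeric features per animal, each in [0, 1].
# Label 1 = fox, 0 = dog.
TRAINING_DATA = [
    ((0.90, 0.80), 1), ((0.80, 0.95), 1), ((0.85, 0.70), 1),
    ((0.10, 0.20), 0), ((0.25, 0.10), 0), ((0.20, 0.30), 0),
]
HIDDEN = 3  # neurons in the single hidden layer


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(seed=0):
    """Small random weights break the symmetry between hidden neurons."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(HIDDEN)]
    b1 = [0.0] * HIDDEN
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)]
    return w1, b1, w2, 0.0


def forward(params, x):
    """Layer 1 (tanh neurons) feeds layer 2 (one sigmoid output neuron)."""
    w1, b1, w2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(w1, b1)]
    p = sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)
    return h, p


def loss(params, data):
    """Mean cross-entropy between predicted probabilities and labels."""
    total = 0.0
    for x, y in data:
        _, p = forward(params, x)
        p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(params, data, lr=0.5, epochs=2000):
    """Repeatedly nudge every weight to reduce the prediction error."""
    w1, b1, w2, b2 = params
    for _ in range(epochs):
        for x, y in data:
            h, p = forward((w1, b1, w2, b2), x)
            d_out = p - y  # error signal at the output neuron
            for j in range(HIDDEN):
                # Chain rule through tanh; uses w2[j] before it is updated.
                d_hidden = d_out * w2[j] * (1 - h[j] ** 2)
                w2[j] -= lr * d_out * h[j]
                for i in range(2):
                    w1[j][i] -= lr * d_hidden * x[i]
                b1[j] -= lr * d_hidden
            b2 -= lr * d_out
    return w1, b1, w2, b2


def classify(params, x):
    """Return 1 (fox) or 0 (dog) for an unlabeled example."""
    return 1 if forward(params, x)[1] >= 0.5 else 0


params = init_params(seed=0)
before = loss(params, TRAINING_DATA)
params = train(params, TRAINING_DATA)
after = loss(params, TRAINING_DATA)
print(f"training loss: {before:.3f} -> {after:.3f}")
print("fox" if classify(params, (0.88, 0.90)) == 1 else "dog")
```

Calling `train` again with newly labeled examples, including ones the network previously mispredicted, is one simple way to keep refining it. A real deep learning system would learn its own features from raw pixels across many more layers rather than relying on two hand-picked inputs.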

AI Software and the IP Landscape

Many companies and universities have open-sourced or otherwise contributed a substantial amount of deep-learning software to the public. Caffe (UC Berkeley), TensorFlow (Google), and Torch are among the most popular frameworks. Nonetheless, every major player almost certainly has its own proprietary techniques.

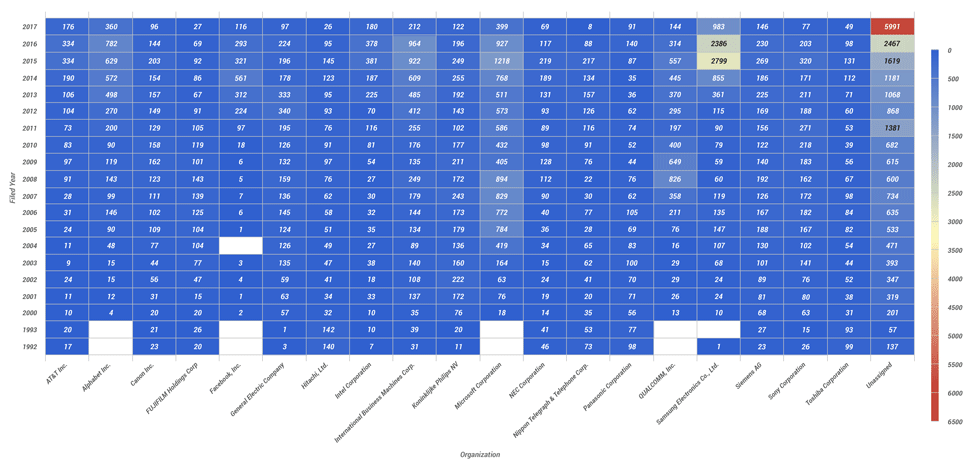

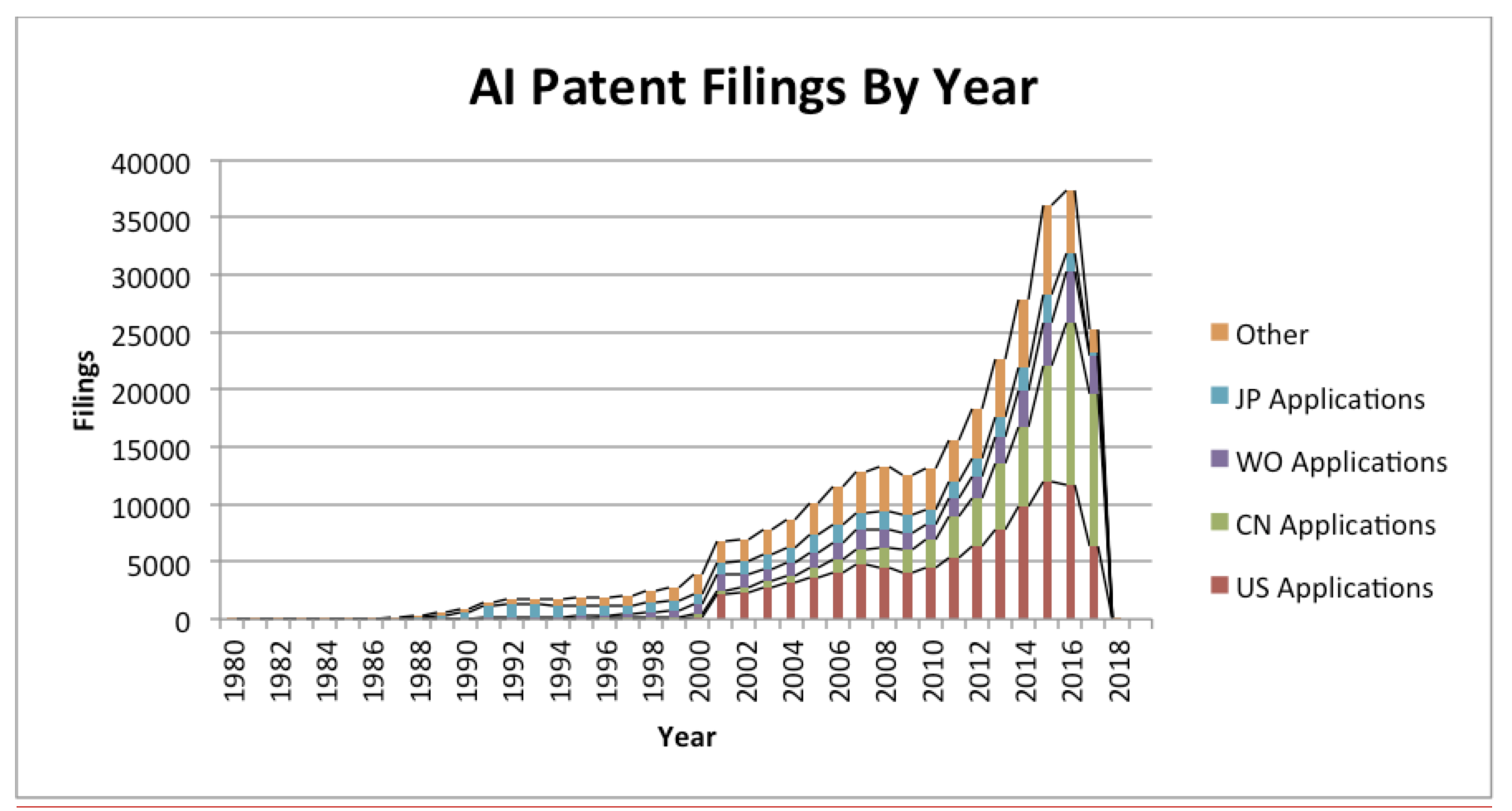

AI-based innovations have been the subject of USPTO filings for many years and, as illustrated above, companies operating in this space have traditionally pursued aggressive IP protection strategies.

However, because deep learning algorithms are perceived to be part of the software arts, the Supreme Court’s 2014 decision in Alice Corp. v. CLS Bank International and the ensuing uncertainty about the patentability of software have led to an abrupt decrease in U.S. filings, as depicted above.

This suggests that companies are not receiving sufficient counsel to confidently file patentable inventions in the AI space. Companies innovating in AI should know that the Federal Circuit has now provided enough guidance to allow patent applicants to direct claims to subject matter that will reliably survive Alice challenges. In short, AI claims crafted with sufficient care and precision will not fail Section 101 challenges under Alice if the patent discloses and claims a technical solution to a technical problem using a non-conventional combination of elements.

The Mintz Levin team has written extensively about the line of Alice decisions and has deep experience securing and enforcing patent rights in view of these issues. Most recently, in Finjan, Inc. v. Blue Coat Systems, Inc., 2018 U.S. App. LEXIS 601 (Fed. Cir. Jan. 10, 2018), the Federal Circuit confirmed its previous holdings from the Alice line of cases that “software-based innovations can make ‘non-abstract improvements to computer technology’ and be deemed patent-eligible subject matter[.]” Finjan, 2018 U.S. App. LEXIS 601 at *8 (quoting Enfish, LLC v. Microsoft Corp., 822 F.3d 1327, 1335-36 (Fed. Cir. 2016)). However, patentees must remain mindful of providing sufficient disclosure of how the claimed steps accomplish an advantageous result. Id. at *10-12.

AI Hardware and Highly Parallel Processors

A neural net’s computational layers must run on a hardware platform, and graphics processing units, or GPUs (computer hardware devices that were traditionally used to push pixels out to a screen to render 3D graphics), are a natural fit. Among other reasons, unlike central processing units, or CPUs, which typically contain a limited number of complex computational resources, GPUs typically contain hundreds or thousands of highly parallel single instruction, multiple data (SIMD) processors organized in a way that is conducive to running deep learning algorithms.
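Why SIMD hardware suits deep learning can be sketched in a few lines of Python. A fully connected layer computes one dot product per output neuron, all against the same input vector, so each neuron can be assigned its own “lane” and every lane can execute the same multiply-accumulate instruction in lockstep on different data. The function names and numbers below are hypothetical, and Python runs the lanes one after another, so the sketch mirrors only the data layout and lockstep pattern of a GPU, not its speed.

```python
def layer_sequential(weights, inputs):
    """Reference version: compute each neuron's dot product one at a time."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def layer_lockstep(weights, inputs):
    """SIMD-style version: lane j owns neuron j. At each step k, every lane
    executes the same instruction (acc += w * x) on its own weight."""
    lanes = len(weights)
    acc = [0.0] * lanes
    for k, x in enumerate(inputs):      # one "instruction" per input element
        for lane in range(lanes):       # on a GPU these would run simultaneously
            acc[lane] += weights[lane][k] * x
    return acc


# Hypothetical layer: 4 neurons, 3 inputs.
weights = [
    [0.5, -1.0, 2.0],
    [1.5, 0.25, -0.5],
    [0.0, 1.0, 1.0],
    [-2.0, 0.5, 0.75],
]
inputs = [1.0, 2.0, 3.0]

print(layer_sequential(weights, inputs))  # [4.5, 0.5, 5.0, 1.25]
print(layer_lockstep(weights, inputs))    # same values
```

Because no lane ever needs another lane's result, adding more lanes (more GPU compute units) lets more neurons be evaluated at once without changing the instruction stream.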

While the goals of deep learning and 3D graphics processing differ, the underlying GPU hardware architectures they use are similar, if not identical, to the architectures at issue in recent ITC investigations that the Mintz Levin team brought on behalf of its clients Graphics Properties Holdings (formerly Silicon Graphics), Advanced Silicon Technology, and Advanced Micro Devices (AMD). The key difference is that deep learning applications do not use all of the 3D graphics hardware. Rather, deep learning mainly makes use of the GPU’s compute units. GPUs often also contain additional circuitry specialized to accelerate 3D graphics rendering, such as “fixed-function” triangle setup units, scan converters, rasterizers, and texture units, which has limited utility for deep learning.

Over the past several years, a new class of hardware accelerators has emerged. These devices, dubbed “neural processing units” or NPUs, are similar to GPUs but lack the overhead of fixed-function GPU circuitry and are optimized in hardware to handle dot-product math and matrix operations using lower-precision numbers. However, unlike GPUs, which allocate compute resources on demand to service both graphics and AI workloads and thereby minimize idle time, NPUs are dedicated to a single task: AI processing. As a result, choosing the optimum hardware solution largely depends on the specific use case. Looking further ahead, the way machine learning algorithms (and the GPU or NPU hardware architectures that run them) evolve over time will also factor into the selection process.
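The lower-precision dot-product math mentioned above can be illustrated with 8-bit integer quantization, one common scheme assumed here for illustration; actual NPUs vary in the number formats they support. Each float vector is mapped onto integers in [-127, 127] using a single scale factor, the integer products are summed, and the total is rescaled back to a real number. The helper names and sample vectors are hypothetical.

```python
def quantize(vec, bits=8):
    """Symmetric linear quantization of a float vector to signed integers."""
    qmax = 2 ** (bits - 1) - 1                 # 127 for 8-bit
    scale = max(abs(v) for v in vec) / qmax
    if scale == 0:                             # all-zero vector
        return [0] * len(vec), 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in vec]
    return q, scale


def dot_float(a, b):
    """Full-precision reference dot product."""
    return sum(x * y for x, y in zip(a, b))


def dot_low_precision(a, b, bits=8):
    """Quantize both vectors, multiply-accumulate in integers, then rescale."""
    qa, sa = quantize(a, bits)
    qb, sb = quantize(b, bits)
    # Python ints never overflow; hardware would typically accumulate
    # 8-bit products in a wider (e.g. 32-bit) register.
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * sa * sb


a = [0.5, -1.2, 3.3, 0.8]
b = [1.1, 0.4, 0.7, 2.0]
print(quantize(a)[0])            # [19, -46, 127, 31]
print(dot_float(a, b))
print(dot_low_precision(a, b))
```

For these sample vectors, the 8-bit result (about 3.971) lands within about 0.01 of the full-precision result (about 3.98). Small integer multipliers are typically far cheaper in silicon area and power than floating-point ones, which is the trade-off low-precision accelerators exploit.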

Powered by AI: Our Path to the Future or Industry Hype?

Beyond refining the algorithms and the hardware, technology companies are competing to be first to market with meaningful new features that truly differentiate them from their competitors. Winning requires much more than slapping a “powered by AI” label on the end product. Among other things, it involves sifting through endless possibilities for integrating new sensor technologies, computational resources, and deep learning. Apple’s latest face unlock technology is a prime example. At least six years ago (an eternity in the smartphone industry), Android-based devices were already shipping with face unlock technology. Much like Apple’s recently introduced Face ID, Android’s face unlock was powered by deep learning techniques. But the nearly universal consensus among Android users was that face unlock was nothing more than a marketing gimmick: it did not unlock reliably in low light, and in good lighting it could be fooled by a photograph. Apple’s iPhone X caught the competition off guard. By combining deep learning with more localized computational resources (for low latency) and a much more sophisticated set of sensors (including an infrared camera, a flood illuminator, a regular camera, and a dot projector), Apple solved these problems and transformed what was once a marketing gimmick into a genuine selling point, sending every major competitor scrambling to develop and release “real” face unlock technology in its next-generation products.

Conclusion

In sum, while it remains uncertain how far the technology will ultimately take us, it is clear that the field of AI is young, disruptive, and here to stay.

If you have any questions about this topic, please contact the authors or your principal Mintz Levin attorney.
